| Jan 2020 - Jul 2022 | Partner/Technical Lead PureTech Capital Management LLC Palo Alto, USA |

| Apr 2016 - Mar 2020 | Chief Software Architect ModelOp Inc Chicago, USA |

| Sep 2012 - Mar 2016 | Founder/CTO Cloudozer LLP Kyiv, Ukraine |

| Jan 2012 - Aug 2012 | Founder/CTO fi-matrix Software Inc Houston, USA |

| Jan 2011 - Jan 2013 | CTO Barque Energy Group Quebec, Canada |

| Jul 2008 - Aug 2009 | General Manager Sitronics Labs Moscow, Russia |

| Jul 2006 - Aug 2009 | Director, Advanced R&D JSC Sitronics Moscow, Russia |

| 2006 - 2008 | MSE, Executive Master's in Technology Management (EMTM) University of Pennsylvania, SEAS (co-sponsored by Wharton School) Philadelphia, USA |

| 1989 - 1995 | Master of Science, Applied Mathematics Moscow Institute of Physics and Technology (MIPT) Moscow, Russia |

The implemented version of the system includes gathering data from multiple data providers, training models, executing trading strategies, checking limits, and reporting.

The system is implemented in Go, Scala, Python, and Julia. It has been deployed to Google Cloud. The system is serverless: there are almost no permanent components.

It handles terabytes of data and many millions in trading volume.

| Idea | 10% |

|---|---|

| Requirements | 50% |

| Implementation | 90% |

| Maintenance | 100% |

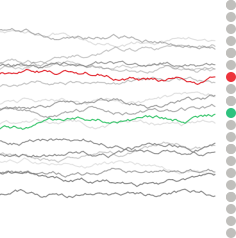

The signal detector checks whether one time series is predictable from another. The idea is to make the detector sufficiently general to apply it to all available public time series that are even vaguely related to markets.

The detector is implemented in Julia. It can consume input of almost any Julia data type. This allows rapid testing of a new data source, as the effort of making it compatible with the signal detector is relatively small.

The signal detection algorithm itself is novel and proprietary.

| Idea | 50% |

|---|---|

| Requirements | 50% |

| Implementation | 50% |

| Maintenance | 50% |

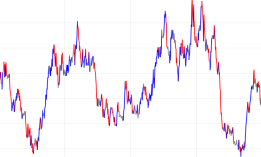

The backtesting framework estimates the performance of trading strategies as if they had run in the past. It serves as a gatekeeper preceding strategy deployment. The deployment platform requires a running strategy to have a recent backtesting report. It compares the actual performance of the strategy with the expectations from the report.

The framework has a few unique features. For example, it can test strategies packaged as microservices in preparation for deployment. This removes any discrepancies between what is being tested and what runs in production. For certain strategies trading on a daily cadence, the execution time may be important. In such cases the framework uses tick-level data to pinpoint the most likely execution time and price.

The backtesting framework is implemented in Go as a series of command-line tools.

| Idea | 50% |

|---|---|

| Requirements | 50% |

| Implementation | 100% |

| Maintenance | 100% |

This model of the behaviour of market participants assumes that the market moves mostly because existing positions are closed, and that the decision to close a position depends on the difference between the opening price and the current price. A trader may decide to exit the position because the profits are high (greed) or because the losses are unsustainable (fear). Exiting positions is associated with a sense of urgency. The urgency leads to price impact.

The propensity to exit model has a compact discrete formulation, yet it has resisted all attempts at finding closed-form solutions. Computer simulations have shown that there must exist solutions with prices trending at a steady rate.

The model is a promising approach to understanding the dynamics of markets lacking fundamentals, such as cryptocurrencies.

| Idea | 100% |

|---|---|

| Requirements | 100% |

| Implementation | 100% |

| Maintenance | 100% |

In the course of the project I explored a new indicator, the 'time traveler gain'. The indicator calculates the maximum gain that can be realized with perfect knowledge of future prices and without leverage.

It turned out that trading costs make the calculation of the time traveler gain an NP-complete problem. At the same time, an approximate algorithm equipped with a few heuristics yields near-optimal results.

The time traveler gain is yet another indicator of market volatility. It seems more to the point than the standard deviation as a measure of volatility.
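As a rough illustration of the indicator, the sketch below computes only the cost-free baseline. It assumes a long-only, fully invested, unleveraged trader who pays no trading costs. These assumptions, along with the function name `time_traveler_gain`, are introduced here for illustration. The version with trading costs described above is not attempted.

```python
from typing import Sequence


def time_traveler_gain(prices: Sequence[float]) -> float:
    """Cost-free, long-only baseline of the time traveler gain.

    Returns the multiple by which 1.0 unit of capital grows if the trader
    holds the asset over every interval where the price rises and stays in
    cash otherwise. Assumes strictly positive prices and zero trading costs.
    """
    wealth = 1.0
    for prev, curr in zip(prices, prices[1:]):
        if curr > prev:
            # With perfect foresight the whole capital rides each up-move.
            wealth *= curr / prev
    return wealth


if __name__ == "__main__":
    # Two up-moves (100 -> 110 and 99 -> 121) are captured; the drop is skipped.
    print(time_traveler_gain([100.0, 110.0, 99.0, 121.0]))
```

Without costs the optimum is simply the product of all positive one-step returns, which is why this baseline is trivial to compute. Costs couple the decisions across steps and remove that shortcut.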

| Idea | 80% |

|---|---|

| Requirements | 80% |

| Implementation | 80% |

| Maintenance | 100% |

The platform 'operationalizes' the lifecycle management of analytic models.

I have implemented the core components of the initial version of the platform. One of them, the engine, could control models written in Python, R, Java, and C. It also allowed uniform access to a range of data sources, such as Kafka, Hadoop, and S3, using a range of data formats.

The engine has been implemented in Erlang with bits of other languages. It has been highly tuned for performance. For example, the Avro schema would be compiled into a set of Erlang routines to provide for the fastest decoding possible.
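The idea of compiling a schema into decoding routines can be sketched as follows. This is a Python illustration, not the Erlang implementation. It assumes a flat record schema with only `int`, `long`, `string`, and `double` fields. The names `compile_record`, `PRIMITIVES`, and the `Tick` schema are hypothetical. The schema is walked once, producing a fixed plan of reader functions, so decoding a message does no schema lookups.

```python
import struct
from typing import Any, Callable, Dict, Tuple

Decoder = Callable[[bytes, int], Tuple[Any, int]]


def read_long(buf: bytes, pos: int) -> Tuple[int, int]:
    """Avro int/long: variable-length, zigzag-encoded integer."""
    acc, shift = 0, 0
    while True:
        byte = buf[pos]
        pos += 1
        acc |= (byte & 0x7F) << shift
        if not byte & 0x80:
            break
        shift += 7
    return (acc >> 1) ^ -(acc & 1), pos


def read_string(buf: bytes, pos: int) -> Tuple[str, int]:
    """Avro string: long length followed by UTF-8 bytes."""
    length, pos = read_long(buf, pos)
    return buf[pos:pos + length].decode("utf-8"), pos + length


def read_double(buf: bytes, pos: int) -> Tuple[float, int]:
    """Avro double: 8 bytes, little-endian IEEE 754."""
    return struct.unpack_from("<d", buf, pos)[0], pos + 8


PRIMITIVES: Dict[str, Decoder] = {
    "int": read_long,
    "long": read_long,
    "string": read_string,
    "double": read_double,
}


def compile_record(schema: dict) -> Decoder:
    """Resolve the schema once into a list of (field name, reader) pairs."""
    plan = [(f["name"], PRIMITIVES[f["type"]]) for f in schema["fields"]]

    def decode(buf: bytes, pos: int = 0) -> Tuple[dict, int]:
        record = {}
        for name, reader in plan:
            record[name], pos = reader(buf, pos)
        return record, pos

    return decode


# Hypothetical schema and payload, used only to exercise the sketch.
TICK_SCHEMA = {
    "type": "record",
    "name": "Tick",
    "fields": [
        {"name": "id", "type": "long"},
        {"name": "symbol", "type": "string"},
        {"name": "price", "type": "double"},
    ],
}
decode_tick = compile_record(TICK_SCHEMA)
payload = b"\x06" + b"\x04ab" + struct.pack("<d", 1.5)
record, consumed = decode_tick(payload)
```

Here `record` holds the three decoded fields and `consumed` is the number of bytes read. A code-generating compiler goes one step further than these closures by emitting source code per schema, but the separation between schema analysis and per-message work is the same.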

The engine had full control over the execution flow. It did not use third-party libraries which could start new processes, etc. Many data access components were custom-made: a Kafka client, an HDFS client, AWS authentication.

The initial version of the platform resulted in a series of pilot projects at Fortune 500 companies.

| Patent No | Title |

|---|---|

| 11,003,486 | Dynamically configurable microservice model for data analysis using sensors |

| 10,891,151 | Deployment and management platform for model execution engine containers |

| 10,860,365 | Analytic model execution engine with instrumentation for granular performance analysis for metrics and diagnostics for troubleshooting |

| Idea | 0% |

|---|---|

| Requirements | 10% |

| Implementation | 80% |

| Maintenance | 50% |

Membrane is yet another attempt to simplify passing data between ML components written in different languages. It focuses on the mathematical meaning of data. For example, the number 3 (three) is treated as such regardless of whether it is represented as an unsigned byte in the producer or as a 64-bit IEEE 754 floating-point value in the consumer.

Membrane targets local interfaces, not networking. A producer and consumer are extended with autogenerated adapters. Plugging a producer into a consumer boils down to linking together corresponding object files.

Membrane does not introduce a new intermediate format, in contrast to similar mechanisms such as Protocol Buffers. There are no serialization/deserialization steps.

The Membrane compiler has been implemented in OCaml. The initial version generated adapters for R, Java, and C.

| Idea | 100% |

|---|---|

| Requirements | 100% |

| Implementation | 100% |

| Maintenance | 100% |

(PFA is an open, JSON-based format for representing analytic models.)

The compiler has been developed in the course of a daring project to convert a large SAS model to a different format. The SAS model had >10K lines of code and used a substantial subset of the SAS language.

The SAS-to-PFA compiler has been implemented in OCaml. The compiler proved to be complete enough to translate the target model. The converted model has been producing results identical to the original.

An interesting feature of the compiler is speculative parsing. The SAS language does not have proper keywords. For example, the following is a valid SAS statement:

IF ELSE = 1 THEN ELSE = 2;

The compiler had to explore multiple ways to parse the SAS code to build a valid syntax tree.
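The flavour of speculative parsing can be shown on a toy grammar. The sketch below is written in Python rather than OCaml and is not the compiler's actual algorithm. It assumes a tiny hypothetical subset of SAS (an `IF ... THEN ...` statement with an optional `ELSE` branch, and `NAME = NUMBER;` assignments) and uses ordered choice with backtracking. Each alternative is tried in turn, and a failed attempt leaves the input position untouched so the next alternative can reinterpret the same tokens.

```python
import re
from typing import List, Optional, Tuple


def tokenize(src: str) -> List[str]:
    return [t.upper() for t in re.findall(r"[A-Za-z_]\w*|\d+|[=;]", src)]


def is_name(tok: str) -> bool:
    # Any word qualifies as a variable name, including IF, THEN and ELSE.
    return re.fullmatch(r"[A-Z_]\w*", tok) is not None


def parse_equation(toks: List[str], pos: int) -> Optional[Tuple[tuple, int]]:
    """NAME '=' NUMBER"""
    if (pos + 3 <= len(toks) and is_name(toks[pos])
            and toks[pos + 1] == "=" and toks[pos + 2].isdigit()):
        return (toks[pos], int(toks[pos + 2])), pos + 3
    return None


def parse_assignment(toks: List[str], pos: int) -> Optional[Tuple[tuple, int]]:
    """NAME '=' NUMBER ';'"""
    eq = parse_equation(toks, pos)
    if eq is None:
        return None
    (name, value), pos = eq
    if pos < len(toks) and toks[pos] == ";":
        return ("assign", name, value), pos + 1
    return None


def parse_if(toks: List[str], pos: int) -> Optional[Tuple[tuple, int]]:
    """'IF' equation 'THEN' statement ['ELSE' statement]"""
    if pos >= len(toks) or toks[pos] != "IF":
        return None
    cond = parse_equation(toks, pos + 1)
    if cond is None:
        return None
    condition, pos = cond
    if pos >= len(toks) or toks[pos] != "THEN":
        return None
    then = parse_statement(toks, pos + 1)
    if then is None:
        return None
    then_node, pos = then
    # Speculate that ELSE opens an else-branch; fall back if that fails.
    if pos < len(toks) and toks[pos] == "ELSE":
        other = parse_statement(toks, pos + 1)
        if other is not None:
            else_node, end = other
            return ("if", condition, then_node, else_node), end
    return ("if", condition, then_node, None), pos


def parse_statement(toks: List[str], pos: int) -> Optional[Tuple[tuple, int]]:
    for alternative in (parse_if, parse_assignment):
        result = alternative(toks, pos)
        if result is not None:
            return result
    return None


def parse_program(src: str) -> List[tuple]:
    toks, pos, nodes = tokenize(src), 0, []
    while pos < len(toks):
        result = parse_statement(toks, pos)
        if result is None:
            raise SyntaxError(f"cannot parse at token {pos}")
        node, pos = result
        nodes.append(node)
    return nodes
```

With this sketch, `parse_program("IF ELSE = 1 THEN ELSE = 2;")` reads the first `ELSE` as a variable in the condition and the second as the target of an assignment. `parse_program("IF = 1;")` first fails as an IF statement and then succeeds as an assignment to a variable named `IF`.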

| Idea | 10% |

|---|---|

| Requirements | 50% |

| Implementation | 100% |

| Maintenance | 100% |

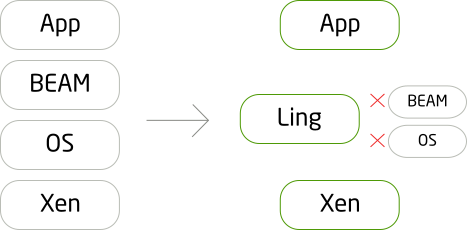

It is a massive project that is hard to describe succinctly. The new Erlang VM, called LING, has been implemented from scratch. LING is the functional equivalent of the standard Erlang VM (BEAM).

LING is a unikernel: it does not require an operating system. It boots directly as a Xen instance. Essentially, Erlang processes become OS processes, Erlang drivers become OS drivers, and the Erlang shell becomes the OS shell.

LING's optimization target is the startup time. I tried hard to shrink the time from the beginning of the boot sequence until the application code starts to execute. As a result, it is possible to spin up a LING-based Xen instance within 100 ms. At the time, a Linux-based Xen instance would take tens of seconds to boot.

LING uses a unique approach to specialization of the instruction set of the Erlang VM. The build process analyzes a large body of Erlang code to identify widespread instruction variants. For example, if the `load x1, 13` instruction occurs often, then a new opcode is allocated specifically for loading the value 13 into the register x1. Depending on the analyzed Erlang code, the low-level instruction set of LING could be quite different.

LING was later ported to run on Linux and on a bare-metal MIPS microcontroller.
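The selection step of such a specialization can be sketched in a few lines. The Python below is an illustration under stated assumptions, not LING's build process. Instructions are modelled as plain tuples, and the function names, the `budget` of free opcodes, and the `min_count` threshold are all hypothetical.

```python
from collections import Counter
from typing import Dict, List, Tuple

Instr = Tuple  # (mnemonic, operand, ...), e.g. ("load", "x1", 13)


def pick_specializations(corpus: List[Instr], budget: int,
                         min_count: int = 2) -> Dict[Instr, str]:
    """Give the most frequent exact instruction variants their own opcode.

    `budget` is the number of spare opcodes available for specialization.
    """
    counts = Counter(corpus)
    chosen: Dict[Instr, str] = {}
    for instr, seen in counts.most_common(budget):
        if seen >= min_count:
            # The operands are baked into the opcode name.
            chosen[instr] = "_".join(str(part) for part in instr)
    return chosen


def encode(program: List[Instr], specialized: Dict[Instr, str]) -> List[Instr]:
    """Replace specialized variants with operand-free opcodes."""
    return [(specialized[i],) if i in specialized else i for i in program]


corpus = ([("load", "x1", 13)] * 3
          + [("load", "x2", 7)]
          + [("move", "x1", "x2")] * 2)
table = pick_specializations(corpus, budget=2)
compact = encode([("load", "x1", 13), ("load", "x2", 7)], table)
```

In this example `("load", "x1", 13)` becomes the operand-free opcode `load_x1_13`, while the rare `("load", "x2", 7)` keeps its generic form. Because the table is derived from the analyzed corpus, a different corpus yields a different instruction set.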

github.com/cloudozer/ling

| Idea | 100% |

|---|---|

| Requirements | 100% |

| Implementation | 90% |

| Maintenance | 90% |

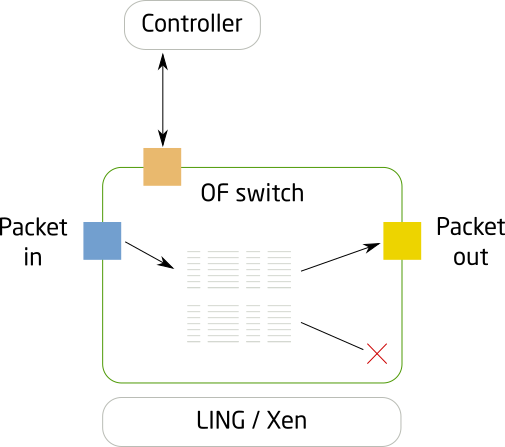

The OpenFlow softswitch has been implemented in pure Erlang targeting a non-standard Erlang VM (LING). A few components have been added to LING in the course of the project. One of them, a netmap driver, allowed the Erlang code to talk directly to the network interface bypassing the kernel.

OpenFlow rules such as 'forward MPLS packets with QoS set to 3 to port 2' were compiled into Erlang pattern matching expressions. The performance of the softswitch has been further enhanced by figuring out the 'fast path' and avoiding memory allocations along the path.
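The translation of flow rules into pattern matching can be illustrated with a small generator that emits Erlang function clauses as text. This is a sketch under loud assumptions. Packets are modelled as Erlang maps with hypothetical keys (`eth_type`, `qos`), and the function name `forward` and the `drop` fallback are invented for the example. How the actual softswitch represented packets and rules is not described here.

```python
from typing import Dict, List, Tuple, Union

Match = Dict[str, Union[str, int]]  # hypothetical header fields to match on
Rule = Tuple[Match, int]            # (match fields, output port)


def compile_rules(rules: List[Rule]) -> str:
    """Emit one Erlang clause per rule, in priority order, plus a fallback."""
    clauses = []
    for match, port in rules:
        # `Key := Value` is Erlang's syntax for matching an existing map key.
        pattern = ", ".join(f"{key} := {value}" for key, value in match.items())
        clauses.append(f"forward(#{{{pattern}}}) -> {{output, {port}}};")
    clauses.append("forward(_) -> drop.")
    return "\n".join(clauses)


# 'forward MPLS packets with QoS set to 3 to port 2'
print(compile_rules([({"eth_type": "mpls", "qos": 3}, 2)]))
# prints:
# forward(#{eth_type := mpls, qos := 3}) -> {output, 2};
# forward(_) -> drop.
```

Once the rules are ordinary function clauses, the Erlang compiler decides how to dispatch among them, so no flow table is interpreted at run time.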

As a result, the time a packet spent in the switch has been reduced to 1.5 μs, a latency that is hard to believe given the dynamic nature of Erlang. The software switch passed all conformance tests for versions 1.2 and 1.3 of the OpenFlow specification.

github.com/FlowForwarding/lincx

| Idea | 10% |

|---|---|

| Requirements | 50% |

| Implementation | 100% |

| Maintenance | 50% |

The pipeline has been developed in collaboration with the University of Chicago. The goal was to shrink the time it takes to find the starting positions of all known genes within newly published versions of the human genome.

We have explored multiple approaches to fuzzy matching, trying to surpass the performance of widely used libraries and algorithms.

The pipeline has been implemented in Erlang in an attempt to harness its distributed mode and pattern matching.

I helped with the design of alignment algorithms and the performance tuning of the Erlang code.

| Idea | 0% |

|---|---|

| Requirements | 20% |

| Implementation | 20% |

| Maintenance | 0% |

maxim.kharchenko@gmail.com